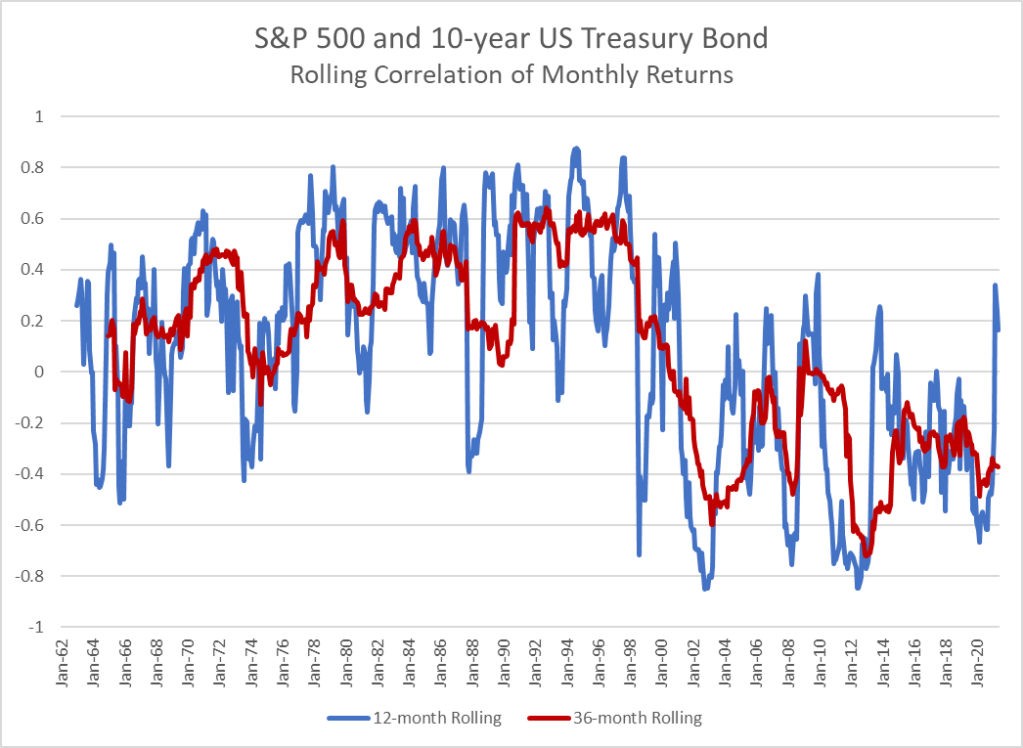

Financial theory requires correlation to be constant (or, at least, known and nonrandom). Nonrandom means predictable with waning sampling error over the period concerned. Ellipticality is a condition more necessary than thin tails; recall my Twitter fight with that non-probabilist Clifford Asness, where I questioned not just his empirical claims and his real-life record, but his own theoretical rigor and the use by that idiot Antti Ilmanen of cartoon models to prove a point about tail hedging. Their entire business reposes on that ghost model of correlation-diversification from modern portfolio theory. The fight was interesting sociologically, but not technically. What is interesting technically is the thingy below.

How do we extract sampling error of a rolling correlation? My coauthor and I could not find it in the literature so we derive the test statistics. The result: it has less than odds of being sampling error.

The derivations are as follows:

Let $X$ and $Y$ be $n$ independent Gaussian variables centered to a mean $0$. Let $\rho_n(\cdot)$ be the operator.

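First, we consider the distribution of the Pearson correlation $\rho_n$ for $n$ observations of pairs assuming $\mathbb{E}(\rho_n) \approx 0$ (the mean is of no relevance as we are focusing on the second moment):

$$f_n(\rho) = \frac{(1-\rho^2)^{\frac{n-4}{2}}}{B\left(\frac{1}{2}, \frac{n-2}{2}\right)}, \quad \rho \in [-1, 1],$$

with characteristic function:

$$\chi_n(\omega) = 2^{\frac{n-3}{2}} \, \omega^{\frac{3-n}{2}} \, \Gamma\left(\frac{n-1}{2}\right) J_{\frac{n-3}{2}}(\omega),$$

where $J_{(\cdot)}(\cdot)$ is the Bessel J function.

We can assert that, for $n$ sufficiently large:

$$\chi_n(\omega) \approx e^{-\frac{\omega^2}{2(n-1)}},$$

the corresponding characteristic function of the Gaussian.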

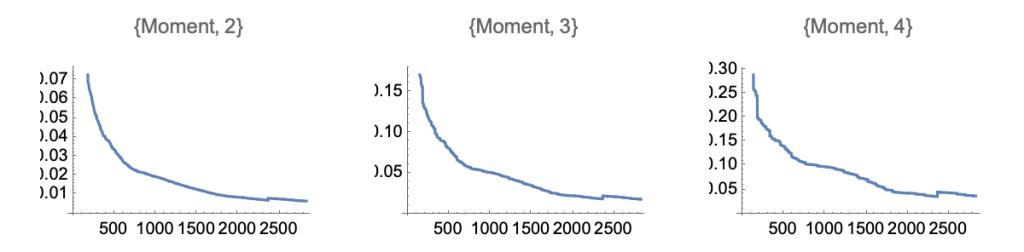

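Moments of order $k$ become:

$$M(k) = \frac{1 + (-1)^k}{2} \, \frac{B\left(\frac{k+1}{2}, \frac{n-2}{2}\right)}{B\left(\frac{1}{2}, \frac{n-2}{2}\right)},$$

where $B(\cdot,\cdot)$ is the Beta function. The standard deviation is $\sigma_n = \sqrt{\frac{1}{n-1}}$ and the kurtosis $\kappa_n = 3 - \frac{6}{n+1}$.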
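A quick numerical sanity check of the two moments, as a sketch only: it assumes the standard results for the sample Pearson correlation of two independent Gaussian series, namely a standard deviation of $\sqrt{1/(n-1)}$ and a kurtosis of $3 - 6/(n+1)$. The window length `n = 24`, the number of trials, and all function names are illustrative choices, not taken from the text.

```python
import math
import random


def pearson(xs, ys):
    """Sample Pearson correlation of two equal-length sequences."""
    k = len(xs)
    mx = sum(xs) / k
    my = sum(ys) / k
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def simulate_null_correlations(n, trials, seed=0):
    """Correlations of independent standard Gaussian pairs, n points each."""
    rng = random.Random(seed)
    out = []
    for _ in range(trials):
        xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
        ys = [rng.gauss(0.0, 1.0) for _ in range(n)]
        out.append(pearson(xs, ys))
    return out


def std_and_kurtosis_about_zero(rs):
    """Root second moment and fourth/second-squared ratio, both about 0."""
    m2 = sum(r * r for r in rs) / len(rs)
    m4 = sum(r ** 4 for r in rs) / len(rs)
    return math.sqrt(m2), m4 / (m2 * m2)


n = 24  # illustrative window length (an assumption)
rs = simulate_null_correlations(n, trials=20000)
std_hat, kurt_hat = std_and_kurtosis_about_zero(rs)
print("std:     ", std_hat, "theory:", math.sqrt(1 / (n - 1)))
print("kurtosis:", kurt_hat, "theory:", 3 - 6 / (n + 1))
```

Moments are taken about zero rather than about the sample mean, in line with the text's use of the second moment in place of the variance.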

This allows us to treat the distribution of $\rho_n$ as Gaussian, and given infinite divisibility, derive the variation of the component, again of

(hence simplify by using the second moment in place of the variance):

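To test how the second moment of the sample coefficient compares to that of a random series, and thanks to the assumption of a mean of $0$, define the average of the squares for nonoverlapping correlations:

$$\Delta_{n,m} = \frac{1}{\lfloor m/n \rfloor} \sum_{i=1}^{\lfloor m/n \rfloor} \rho_n^2(i\,n),$$

where $m$ is the sample size, $n$ is the correlation window, and $\rho_n(i\,n)$ is the correlation over the $i$-th nonoverlapping window. Now we can show that:

$$\Delta_{n,m} \sim \mathcal{G}\left(\frac{p}{2}, \frac{2}{(n-1)\,p}\right),$$

where $p = \lfloor m/n \rfloor$ and $\mathcal{G}$ is the Gamma distribution (shape, scale) with PDF:

$$f(\Delta) = \frac{\left(\frac{(n-1)\,p}{2}\right)^{\frac{p}{2}}}{\Gamma\left(\frac{p}{2}\right)} \, \Delta^{\frac{p}{2}-1} \, e^{-\frac{(n-1)\,p}{2}\Delta}, \quad \Delta > 0,$$

and survival function:

$$S(\Delta) = \frac{\Gamma\left(\frac{p}{2}, \frac{(n-1)\,p}{2}\Delta\right)}{\Gamma\left(\frac{p}{2}\right)},$$

with $\Gamma(\cdot,\cdot)$ the upper incomplete Gamma function, which allows us to obtain p-values below, using $m$ observations (and using the leading order $O(\cdot)$):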

Such low p-values exclude any controversy as to their effectiveness \cite{taleb2016meta}.
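The p-value computation can be sketched as follows, under a stated assumption: if each nonoverlapping window correlation is treated as Gaussian with mean $0$ and variance $1/(n-1)$, then each square is a scaled $\chi^2_1$, and the average of $p$ independent squares is Gamma with shape $p/2$ and scale $2/((n-1)p)$. Since $p$ is an integer, the survival function has a closed form built from `math.erfc` and `math.exp`, so no external library is needed. The correlation values and `n = 36` below are hypothetical inputs for illustration, not the data behind the figures.

```python
import math


def gamma_survival_half_integer(p, x):
    """Regularized upper incomplete gamma Q(p/2, x) for integer p >= 1.

    Uses Q(a + 1, x) = Q(a, x) + x**a * exp(-x) / Gamma(a + 1), starting
    from Q(1/2, x) = erfc(sqrt(x)) or Q(1, x) = exp(-x).
    """
    if x <= 0.0:
        return 1.0
    if p % 2 == 1:
        a, q = 0.5, math.erfc(math.sqrt(x))
    else:
        a, q = 1.0, math.exp(-x)
    while a < p / 2:
        # All terms are positive, so tiny tail values do not cancel.
        q += math.exp(a * math.log(x) - x - math.lgamma(a + 1.0))
        a += 1.0
    return q


def mean_squared_correlation(rhos):
    """Average of squared window correlations (the statistic Delta)."""
    return sum(r * r for r in rhos) / len(rhos)


def null_p_value(delta, n, p):
    """P(Delta >= delta) under the Gamma(p/2, 2/((n-1)p)) null."""
    return gamma_survival_half_integer(p, 0.5 * (n - 1) * p * delta)


# Hypothetical nonoverlapping window correlations (illustration only).
rhos = [0.61, -0.42, 0.55, 0.30, -0.48, 0.52]
n = 36  # hypothetical window length
delta = mean_squared_correlation(rhos)
p_value = null_p_value(delta, n, len(rhos))
print("Delta:", delta, "p-value:", p_value)
```

The recursion is exact for integer and half-integer shapes, which is all this statistic requires; a general-purpose incomplete gamma routine would be the alternative for non-integer degrees of freedom.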

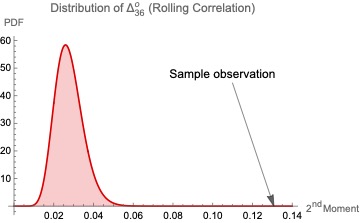

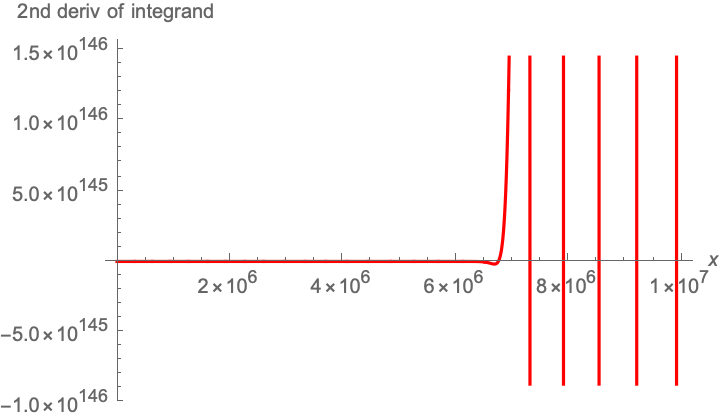

We can also compare rolling correlations using a Monte Carlo for the null with practically the same results (given the exceedingly low p-values). We simulate with overlapping observations:

Rolling windows have the same second moment, but a mildly more compressed distribution since the observations of $\rho_n$ over overlapping windows of length $n$ are autocorrelated (with, we note, an autocorrelation between two observations

orders apart of

). As shown in the figure below for

we get exceedingly low p-values of order

.
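A minimal sketch of such a Monte Carlo for the null, using overlapping (rolling) windows on independent Gaussian series. The sizes `m`, `n`, `sims`, the observed value passed at the end, and all function names are hypothetical; the empirical p-value uses the usual add-one convention, which is an assumption and not necessarily the procedure used for the figures.

```python
import math
import random


def pearson(xs, ys):
    """Sample Pearson correlation of two equal-length sequences."""
    k = len(xs)
    mx = sum(xs) / k
    my = sum(ys) / k
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def rolling_mean_squared_correlation(xs, ys, n):
    """Average of rho^2 over all overlapping windows of length n."""
    count = len(xs) - n + 1
    total = 0.0
    for start in range(count):
        r = pearson(xs[start:start + n], ys[start:start + n])
        total += r * r
    return total / count


def simulate_null_statistics(m, n, sims, seed=0):
    """Rolling statistic on independent Gaussian series of length m."""
    rng = random.Random(seed)
    stats = []
    for _ in range(sims):
        xs = [rng.gauss(0.0, 1.0) for _ in range(m)]
        ys = [rng.gauss(0.0, 1.0) for _ in range(m)]
        stats.append(rolling_mean_squared_correlation(xs, ys, n))
    return stats


def monte_carlo_p_value(observed, null_stats):
    """Share of null draws at least as large as observed (add-one rule)."""
    exceed = sum(1 for s in null_stats if s >= observed)
    return (1 + exceed) / (1 + len(null_stats))


m, n, sims = 240, 12, 200  # hypothetical sizes, kept small for speed
null_stats = simulate_null_statistics(m, n, sims)
null_mean = sum(null_stats) / sims
print("null mean of statistic:", null_mean, "vs 1/(n-1):", 1 / (n - 1))
print("p-value for a hypothetical observed 0.5:",
      monte_carlo_p_value(0.5, null_stats))
```

A simulation with `sims` draws cannot resolve p-values below `1 / (1 + sims)`, so for exceedingly low p-values it serves as a cross-check of the analytical result, not as a replacement for it.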